
Jenkins Best Practices: How to Automate Deployments with Jenkins

Jenkins is really popular for CI (build automation), as well as for general-purpose automation. But I’ve learned the hard way that there are a lot of pitfalls when it comes to deployment automation.

For one thing, deployment automation can get very complicated very quickly, and mistakes can have big consequences. Failed production deployments, downtime, wasted time, frustration, or even security breaches can have a major negative impact on every part of the organization.

In this article, we’ll look at the best — and worst — practices for deployment automation with Jenkins. First, we’ll cover what you’re actually deploying (build automation) and where you store it before deployment (artifact management). Finally, we’ll dive into deployment automation itself.

⚙ Build Automation (CI)

Jenkins was built for CI, and it’s well-respected for this functionality. However, if you’re new to the world of automation, note that there are many alternatives, and you should consider all the options to find the solution that best meets your organizational needs.

Regardless of what tool you use, CI is a fairly standardized process.

First are source control changes. Whether you’re using Git, Subversion, or something else, and whether a developer commits (checks in) code changes to a branch or to the main trunk, the build automation process starts here. Unless you’re operating in the medieval era of coding, you’re using SOME version of source control.

Then comes the automated build. Whether manually triggered by someone clicking “build” or automatically triggered by the change, this is a script that gets (checks out) the code from source control. It often runs one or more tools to…

- Download library packages that the code requires (NuGet, Maven, etc.)

- Compile the code (MSBuild, javac, etc.)

- Perform unit tests on the code (VSTest, JUnit, etc.)

Nearly all CI tools work with the most popular source control systems and most popular tools. Jenkins has by far the most plugins and can “do it all.”

- Source Control: Git, Subversion, Mercurial, CVS, P4, PlasticSCM, AccuRev, ClearCase UCM, Rally, Visual SourceSafe, StarTeam, Seapine Surround SCM, Bazaar, Harvest SCM, SourceGear Vault, BitKeeper, and okay, I’m tired of listing, but trust me, there are a LOT more (you can search for your favorite SCM at https://plugins.jenkins.io/).

- Build Tools: Literally over 500! Way too many to list, but you can browse/search them here: https://plugins.jenkins.io/ui/search/?categories=buildManagement

📦 Build Artifacts and Packages

CI was originally intended to test code and provide testing feedback to developers as they coded. A release engineer would then create a “release” build using a different tool and process altogether. But this was duplicative, slow, and error-prone, as different processes produce different results.

This is where “build artifacts” come in. Build artifacts:

- allow you to use the exact same code that was compiled and passed testing

- are just files like .exe and .dll that are “attached” to a build in the CI system

- can be downloaded as a zip file and used for deployment.

Virtually every CI server supports artifacts.

How to Create Artifacts in Jenkins

Jenkins uses classic CI terminology: “archive artifacts.” In a Freestyle project, just add a post-build action called “Archive the artifacts”; in a Pipeline project, use the archiveArtifacts step. The archived files are then accessible from the build’s page in the Jenkins web UI, so they can be downloaded later.
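
Conceptually, archiving artifacts means collecting the files that match some patterns and keeping them with the build record. The Python sketch below imitates that by zipping matching files into a per-build archive. The function name and the `<job>-<build>.zip` naming are hypothetical; by default, Jenkins itself simply copies archived files into the build’s directory on the controller.

```python
import zipfile
from pathlib import Path


def archive_artifacts(workspace: Path, patterns: list[str], dest_dir: Path,
                      job: str, build_number: int) -> Path:
    """Zip every file under `workspace` matching any of the glob `patterns`.

    Paths inside the zip stay relative to the workspace, the way archived
    artifacts keep their relative paths.
    """
    matched = sorted({p for pattern in patterns for p in workspace.glob(pattern) if p.is_file()})
    if not matched:
        # Jenkins' archiveArtifacts also fails by default when nothing matches.
        raise FileNotFoundError(f"no files matched {patterns} in {workspace}")
    dest_dir.mkdir(parents=True, exist_ok=True)
    archive_path = dest_dir / f"{job}-{build_number}.zip"
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in matched:
            zf.write(path, arcname=path.relative_to(workspace).as_posix())
    return archive_path
```

For example, `archive_artifacts(Path("."), ["target/*.jar"], Path("archive"), "my-app", 42)` would produce `archive/my-app-42.zip`.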

⚠ Anti-pattern: Jenkins as an Artifact Repository

Jenkins was not designed to be an artifact repository, least of all for release-quality builds that you intend to deploy. Jenkins keeps a build’s artifacts only as long as it keeps the build itself, so when an old build is discarded, its artifacts go with it. This means a few things:

- Artifacts that you deployed to a server may be deleted.

- There is no easy way to roll back if artifacts are deleted.

- It’s unclear which Jenkins builds were deployed.

On top of that, Jenkins artifacts are tied to a specific build of a specific project (job) on a specific Jenkins instance. This means that:

⚠ Deleting or recreating a project means your artifacts are gone, and renaming one changes where they live.

⚠ It’s common to run many Jenkins instances, so there can be many (usually too many) places to look for artifacts when you need them.
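
The retention problem is easy to underestimate. Jenkins jobs are often set to discard old builds (for example, keeping only the last N), and that policy has no idea which builds are running in production. This hypothetical Python sketch applies a keep-the-last-N policy and reports which environments would lose the artifacts they’re running; the function names and deployment data are made up for illustration.

```python
def builds_to_discard(build_numbers: list[int], num_to_keep: int) -> list[int]:
    """Builds a 'keep only the last N builds' policy would delete, oldest first."""
    newest_first = sorted(build_numbers, reverse=True)
    return sorted(newest_first[num_to_keep:])


def deployments_at_risk(build_numbers: list[int], num_to_keep: int,
                        deployed: dict[str, int]) -> dict[str, int]:
    """Environments whose currently deployed build would lose its artifacts."""
    doomed = set(builds_to_discard(build_numbers, num_to_keep))
    return {env: build for env, build in deployed.items() if build in doomed}


if __name__ == "__main__":
    builds = list(range(1, 21))                  # builds #1 through #20 of one job
    deployed = {"production": 7, "staging": 19}  # hypothetical deployment state
    print(deployments_at_risk(builds, num_to_keep=10, deployed=deployed))
    # {'production': 7}: production's artifacts are gone, and so is your easy rollback
```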

The better option is to use a centralized artifact repository or package feed.

✔ Alternative: Maven-based Artifact Repository

Maven helps Java developers maintain their Java-based applications by introducing “projects” that organize:

- Code files

- Build scripts to run compiler tools

- Version numbers for compiled code

- Dependency management that lets one project reference a version of another project.

Maven projects are published to “Maven Repositories,” which are essentially like a web-based file share. There are “index” files in a Maven repository that list what projects and versions are stored in the repository, as well as metadata files that describe what each project is.
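
For example, the standard Maven repository layout derives each file’s path from its coordinates: the groupId with dots turned into folders, then the artifactId, then the version. A `maven-metadata.xml` file in the artifactId folder acts as the index of available versions. The Python sketch below computes those paths and reads the version list; the coordinates and sample metadata are made up.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def artifact_path(group_id: str, artifact_id: str, version: str,
                  packaging: str = "jar", classifier: Optional[str] = None) -> str:
    """Relative path of an artifact in the standard Maven repository layout."""
    file_name = f"{artifact_id}-{version}" + (f"-{classifier}" if classifier else "") + f".{packaging}"
    return "/".join([*group_id.split("."), artifact_id, version, file_name])


def metadata_path(group_id: str, artifact_id: str) -> str:
    """Where the per-artifact maven-metadata.xml (the version 'index') lives."""
    return "/".join([*group_id.split("."), artifact_id, "maven-metadata.xml"])


def list_versions(metadata_xml: str) -> list[str]:
    """Versions listed in a maven-metadata.xml document ({*} tolerates an XML namespace)."""
    root = ET.fromstring(metadata_xml)
    return [v.text for v in root.findall("./{*}versioning/{*}versions/{*}version")]


SAMPLE_METADATA = """<metadata>
  <groupId>com.example</groupId>
  <artifactId>my-app</artifactId>
  <versioning>
    <latest>1.1.0</latest>
    <release>1.1.0</release>
    <versions>
      <version>1.0.0</version>
      <version>1.1.0</version>
    </versions>
  </versioning>
</metadata>"""
```

So `artifact_path("com.example", "my-app", "1.0.0")` points to `com/example/my-app/1.0.0/my-app-1.0.0.jar`.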

How to Publish to a Maven-based Artifact Repository in Jenkins

- First, you need to use Maven for your project. An intro to Maven is way beyond the scope of this article, and if you’re not already using Maven, it might not be the best choice, as it has a bit of a learning curve.

- Set up a Maven-based repository. Your options include the free, open-source Apache Archiva, or commercial products such as JFrog Artifactory, Sonatype Nexus, or ProGet, all of which have free editions (obviously I’m biased towards ProGet).

- If you can publish to this repository from your workstation, then you should have no problem doing so from Jenkins. Install the Maven plugin in Jenkins; it adds a “Build” section to your projects where you can specify exactly which Maven goals to execute.
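
It also helps to know what publishing amounts to. Roughly speaking, `mvn deploy` uploads each file to its layout path in the repository with an authenticated HTTP PUT, along with checksum files and updated metadata. The sketch below only builds such requests with Python’s standard library to show the mechanism. It is no substitute for `mvn deploy`; the repository URL and credentials are placeholders, and whether your repository accepts basic-auth PUTs like this depends on its configuration.

```python
import base64
import hashlib
import urllib.request


def build_put_requests(repo_url: str, rel_path: str, data: bytes,
                       username: str, password: str) -> list[urllib.request.Request]:
    """Build (but don't send) PUT requests for a file and its .sha1 checksum file."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    headers = {"Authorization": f"Basic {token}",
               "Content-Type": "application/octet-stream"}
    url = f"{repo_url.rstrip('/')}/{rel_path}"
    sha1 = hashlib.sha1(data).hexdigest().encode("ascii")
    return [
        urllib.request.Request(url, data=data, headers=headers, method="PUT"),
        urllib.request.Request(url + ".sha1", data=sha1, headers=headers, method="PUT"),
    ]


def send(requests_to_send: list[urllib.request.Request]) -> None:
    """Send each request; urlopen raises HTTPError on 4xx/5xx responses."""
    for request in requests_to_send:
        with urllib.request.urlopen(request) as response:
            print(request.full_url, response.status)
```

Keeping request construction separate from sending makes it easy to inspect exactly what would be uploaded before anything touches the repository.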

✔ Alternative: Universal Packages

An alternative to Maven-based artifacts is using universal packages, and it can be a much simpler option.

Unlike Maven-based artifacts, universal packages require no modification to your project or code. Why does that matter? Jenkins and Maven-based artifacts are collections of files of any type (like .jar, .war, .dll, .rpm, .zip, .jpg, etc.), and it’s left to you to version these files and come up with naming rules and conventions for your software releases. Like shared drives, they’re really easy to use: you can add, move, or delete files as you want. But they can get messy and make for an auditing nightmare, because it’s hard to know where anything is.

Universal packages use a standards-defined format: a single file that contains all of your build artifacts, plus a manifest file with the package’s name and version. Unlike artifacts, packages are essentially “read-only,” are Semantically Versioned, and have built-in metadata that provides context on who did what with the package, and when. This simplifies two of the most stressful dev events: auditing and rollbacks.
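
To make “a single file plus a manifest” concrete, here is a Python sketch that builds a package in the layout ProGet documents for universal packages, as we understand it: a zip archive with a `upack.json` manifest at the root and the packaged files under a `package/` folder. Treat that layout as an assumption to check against the ProGet docs, and use ProGet’s own tooling (such as the Jenkins plugin) for real packages. The SemVer check is a simplified regex, and `create_upack` is a hypothetical name.

```python
import json
import re
import zipfile
from pathlib import Path
from typing import Optional

# Simplified SemVer 2.0.0 check: MAJOR.MINOR.PATCH plus optional -prerelease and +build.
SEMVER = re.compile(
    r"(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)"
    r"(?:-[0-9A-Za-z-]+(?:\.[0-9A-Za-z-]+)*)?"
    r"(?:\+[0-9A-Za-z-]+(?:\.[0-9A-Za-z-]+)*)?"
)


def create_upack(source_dir: Path, out_file: Path, name: str, version: str,
                 group: Optional[str] = None) -> Path:
    """Zip source_dir's files under package/, with a upack.json manifest at the root.

    The layout is an assumption based on ProGet's universal package format.
    """
    if not SEMVER.fullmatch(version):
        raise ValueError(f"{version!r} is not a semantic version")
    manifest = {"name": name, "version": version}
    if group:
        manifest["group"] = group
    with zipfile.ZipFile(out_file, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.writestr("upack.json", json.dumps(manifest, indent=2))
        for path in sorted(p for p in source_dir.rglob("*") if p.is_file()):
            zf.write(path, arcname="package/" + path.relative_to(source_dir).as_posix())
    return out_file
```

Validating the version before writing anything means a badly versioned build never produces a package at all.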

Universal packages help you distribute your applications and components uniformly. Packages are built once and deployed consistently across environments, so you can be certain that what goes to production is exactly what was tested.
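
You can check “what was tested is what gets deployed” mechanically: record a cryptographic hash of the package when it passes testing, then refuse to deploy any file whose hash differs. A minimal sketch with SHA-256 follows. The function names are hypothetical, and where you store the recorded hash (package metadata, a deployment record, etc.) is up to you.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-256 of a file, read in chunks so large packages don't fill memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_before_deploy(package: Path, tested_sha256: str) -> None:
    """Raise if the package about to be deployed isn't byte-for-byte the tested one."""
    actual = sha256_of(package)
    if actual != tested_sha256:
        raise RuntimeError(
            f"{package.name}: hash {actual} does not match the tested build {tested_sha256}")
```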

How to Publish to a Universal Package Feed in Jenkins

For this example, we’ll use ProGet.

- First, download and install ProGet on Windows or Linux.

- In ProGet, set up a Universal Feed and an API key.

- Next, in Jenkins, install the ProGet plugin and add the steps to your Jenkins project.

- This gives you a “ProGet Upload Package” step for Freestyle jobs and the `uploadProgetPackage` Pipeline step.

The Jenkins ProGet plugin page has a detailed step-by-step guide. We also have our own guide on how to configure Jenkins and ProGet to publish and deploy universal packages.

Whew! I know that was a long road to get to automated deployments, but you can see how understanding what you’re trying to deploy helps illuminate the best way to deploy it.

🚀 Automated Deployment

Deployment can mean a lot of things depending on the application: copying a web application with lots of files, images, etc.; deploying microservices as containers; or running a blue/green deployment to ensure zero downtime.

But deployment can also mean simply copying the files that you build to a drop folder for another team (or a customer) to use. If this is all you’re doing, this is really easy to do in Jenkins.
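
Scripted by hand, a drop-folder deployment is little more than copying the build output into a versioned folder on a share. This Python sketch does just that and refuses to overwrite an existing version, so a published drop never changes underneath the people consuming it. The folder convention and the `drop_release` name are hypothetical.

```python
import shutil
from pathlib import Path


def drop_release(artifacts_dir: Path, drop_root: Path, app: str, version: str) -> Path:
    """Copy build output to <drop_root>/<app>/<version>/, never overwriting a prior drop."""
    target = drop_root / app / version
    if target.exists():
        raise FileExistsError(f"{target} already exists; drops should be immutable")
    # copytree creates the target (and any missing parents) and copies file metadata.
    shutil.copytree(artifacts_dir, target)
    return target
```

For instance, `drop_release(Path("build/output"), Path(r"\\fileserver\drops"), "my-app", "1.4.0")`, where the share path is a placeholder.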

How to Automate Deployment of Jenkins Artifacts

If you need to copy artifacts to another location on your network, there are two popular plugins:

- ArtifactDeployer, which “enables you to archive build artifacts to any remote locations such as to a separate file server”

- CopyArtifact is primarily used to copy artifacts between projects, but it has a “target” parameter that can be used to copy to a folder

ArtifactDeployer is more purpose-built for deploying, but it hasn’t been updated in about a year. CopyArtifact is not designed for this exact purpose, but it is more popular and more frequently updated.

There are also plugins to deploy to popular locations:

- Deploy artifacts to Amazon’s cloud storage (AWS S3)

- Deploy artifacts to Azure’s Blob storage

- Deploy using FTP

Warning! Make sure to use the Publish Over FTP plugin, NOT FTP Publisher. FTP Publisher has vulnerabilities and is not frequently maintained (I’m not even going to link to it because I really don’t want you to use it).
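
To see what an FTP deployment step boils down to (Publish Over FTP does this for you, with many more options), here is a sketch using Python’s standard `ftplib`. It uploads the files in one local folder, non-recursively, to an existing remote folder over FTPS. The host, credentials, paths, and function names are placeholders.

```python
import ftplib
from pathlib import Path


def upload_folder(ftp: ftplib.FTP, local_dir: Path, remote_dir: str) -> list[str]:
    """Upload the files directly inside local_dir to remote_dir (subfolders are skipped)."""
    ftp.cwd(remote_dir)
    uploaded = []
    for path in sorted(p for p in local_dir.iterdir() if p.is_file()):
        with path.open("rb") as f:
            ftp.storbinary(f"STOR {path.name}", f)
        uploaded.append(path.name)
    return uploaded


def deploy_over_ftps(host: str, user: str, password: str,
                     local_dir: str, remote_dir: str) -> list[str]:
    """Log in over TLS and encrypt file transfers too before uploading."""
    with ftplib.FTP_TLS(host) as ftp:
        ftp.login(user, password)  # FTP_TLS secures the control connection before login
        ftp.prot_p()               # switch the data connection to TLS as well
        return upload_folder(ftp, Path(local_dir), remote_dir)
```

Taking the connection as a parameter in `upload_folder` keeps the upload logic testable without a real FTP server.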

Using Jenkins to automate deployment beyond simply copying artifacts to other file servers or the cloud is not recommended. Let me explain why.

⚠ Anti-pattern: Scripting Your Own Deployments with Jenkins

Jenkins is an all-purpose automation tool that was designed for Continuous Integration. It can run scripts, which means it can do anything you can script, including deployment.

But this means YOU have to script deployment, and there’s a LOT to deployments.

- Large, monolithic applications have thousands of files that can take a long time to deploy. They require starting and stopping components at just the right time, are a bit quirky, and sometimes need a retry.

- Microservices are often distributed to many servers. Each one is relatively easy to deploy, but you often need to deploy several at the same time, and there are often lots and lots of them, each with a slightly different deployment process because they were developed and improved over many years.
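
To give a feel for what scripting it yourself involves, here is a deliberately tiny Python sketch of a single component’s deployment: stop it, copy files with retries, and always try to start it again. All names are hypothetical. A real deployment script would also need health checks, rollback, logging, and coordination across servers, which is exactly the work that piles up.

```python
import time
from typing import Callable


def with_retries(action: Callable[[], None], attempts: int = 3, delay_s: float = 2.0) -> None:
    """Run action, retrying on any exception; re-raise after the last attempt."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            action()
            return
        except Exception as exc:
            if attempt == attempts:
                raise
            print(f"attempt {attempt} failed ({exc}); retrying in {delay_s}s")
            time.sleep(delay_s)


def deploy_component(stop: Callable[[], None], copy_files: Callable[[], None],
                     start: Callable[[], None], attempts: int = 3, delay_s: float = 2.0) -> None:
    """Stop, copy (with retries), then start, even if the copy ultimately failed."""
    stop()
    try:
        with_retries(copy_files, attempts, delay_s)
    finally:
        # Restart so the component isn't left down; a partial copy could still
        # leave mixed old and new files, one more thing a real script must handle.
        start()
```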

✔ Alternative: Use a Tool that Can Directly Deploy from Jenkins

There are a few tools that can integrate with and deploy directly from Jenkins. Most require that you use Jenkins to publish to an artifact repository or package feed first, and then deploy from that.

BuildMaster is one of these tools, and it directly communicates with Jenkins. BuildMaster will import artifacts directly from Jenkins and then handle all of the deployment complexity. It can also automatically queue builds in Jenkins, which means you can have BuildMaster use Jenkins behind the scenes. You can learn all about this integration on our Docs page.
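
BuildMaster’s integration has its own implementation, but the general idea of importing artifacts from Jenkins can be sketched against Jenkins’ standard remote access API: a build’s `api/json` lists its archived files under `artifacts` (each with a `relativePath`), and each file is served from the build’s `artifact/` URL. The Python sketch below only builds those URLs from a sample response. The server URL, job names, and sample data are hypothetical, and real requests need authentication (for example, a Jenkins user API token).

```python
import json
from urllib.parse import quote


def job_url(jenkins_url: str, job_path: str) -> str:
    """'team/my-app' -> '<jenkins>/job/team/job/my-app' (each folder adds a /job/ segment)."""
    segments = "/".join(f"job/{quote(part, safe='')}" for part in job_path.split("/"))
    return f"{jenkins_url.rstrip('/')}/{segments}"


def last_successful_build_api(jenkins_url: str, job_path: str) -> str:
    """JSON API URL for the job's last successful build."""
    return f"{job_url(jenkins_url, job_path)}/lastSuccessfulBuild/api/json"


def artifact_urls(jenkins_url: str, job_path: str, build_json: str) -> list[str]:
    """Download URLs for every artifact listed in a build's api/json response."""
    build = json.loads(build_json)
    base = f"{job_url(jenkins_url, job_path)}/{build['number']}/artifact"
    return [f"{base}/{quote(a['relativePath'])}" for a in build.get("artifacts", [])]


# Trimmed, made-up example of a build's api/json response.
SAMPLE_BUILD_JSON = json.dumps({
    "number": 42,
    "result": "SUCCESS",
    "artifacts": [
        {"fileName": "my-app.jar", "relativePath": "target/my-app.jar", "displayPath": "my-app.jar"},
    ],
})
```

Resolving `lastSuccessfulBuild` to a concrete build number first, then downloading by that number, avoids picking up a newer build that finishes mid-import.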

✔ Alternative: Deploy from Your Maven Repository or Package Feed

This adds a step to the process, but it’s worthwhile if you want to use a central repository for your build artifacts. (There are a lot of commercial and open-source tools that can deploy from one.)

This makes sense when you want to have other tools (alternative CI servers for example) publish to this central location; when you have artifacts or packages distributed to many locations for edge-based deployment; or if you need to create a “no-man’s land” between the build and deployment tool.

BuildMaster and ProGet can also do this, and offer the added benefits of:

- Repackaging: To indicate package quality while maintaining immutability

- Package Promotion: To ensure only approved and verified packages are used in the correct environments

- Deployment Records: To have a package-centered view of deployments to help with auditing and rollbacks

- Package Usage Scanning: To tell you which packages are installed where
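
Promotion and deployment records fit together naturally, which a few lines can show. The Python sketch below is a hypothetical in-memory model, not the ProGet or BuildMaster API: packages are never modified, only promoted from feed to feed after approval; a package can be deployed to an environment only once it’s in that environment’s feed; and every deployment is recorded, so a rollback is simply redeploying an earlier record.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Package:
    name: str
    version: str


@dataclass
class ReleaseFlow:
    # Feeds in promotion order; here each environment shares its feed's name (an assumption).
    feeds: list[str]
    contents: dict[str, set[Package]] = field(default_factory=dict)  # feed -> packages in it
    history: dict[str, list[Package]] = field(default_factory=dict)  # environment -> deployments, oldest first

    def publish(self, pkg: Package) -> None:
        """New packages always land in the first feed."""
        self.contents.setdefault(self.feeds[0], set()).add(pkg)

    def _highest_feed(self, pkg: Package) -> str:
        for feed in reversed(self.feeds):
            if pkg in self.contents.get(feed, set()):
                return feed
        raise KeyError(f"{pkg} was never published")

    def promote(self, pkg: Package, approved: bool) -> str:
        """Copy pkg one feed forward if approved; the package itself never changes."""
        if not approved:
            raise PermissionError(f"{pkg} is not approved for promotion")
        index = self.feeds.index(self._highest_feed(pkg))
        if index + 1 == len(self.feeds):
            raise ValueError(f"{pkg} is already in the last feed")
        target = self.feeds[index + 1]
        self.contents.setdefault(target, set()).add(pkg)
        return target

    def deploy(self, pkg: Package, environment: str) -> None:
        """Only packages promoted into an environment's feed may be deployed there."""
        if pkg not in self.contents.get(environment, set()):
            raise PermissionError(f"{pkg} has not been promoted to {environment}")
        self.history.setdefault(environment, []).append(pkg)

    def rollback(self, environment: str) -> Package:
        """Redeploy the most recent earlier package; the rollback is recorded too."""
        records = self.history.get(environment, [])
        if not records:
            raise LookupError(f"nothing has been deployed to {environment}")
        current = records[-1]
        for pkg in reversed(records[:-1]):
            if pkg != current:
                records.append(pkg)
                return pkg
        raise LookupError(f"no earlier package to roll back to in {environment}")
```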

Obviously, I’m biased towards our products, but doing CD tasks with the right tools, like BuildMaster, makes a serious difference in quality compared to using Jenkins alone.

🏆 Next Steps: Continuous Delivery

In this article, I’ve gone through the gritty details of deployments and how they happen in Jenkins. The fact is that doing anything more than copying artifacts is pretty much beyond the scope of Jenkins.

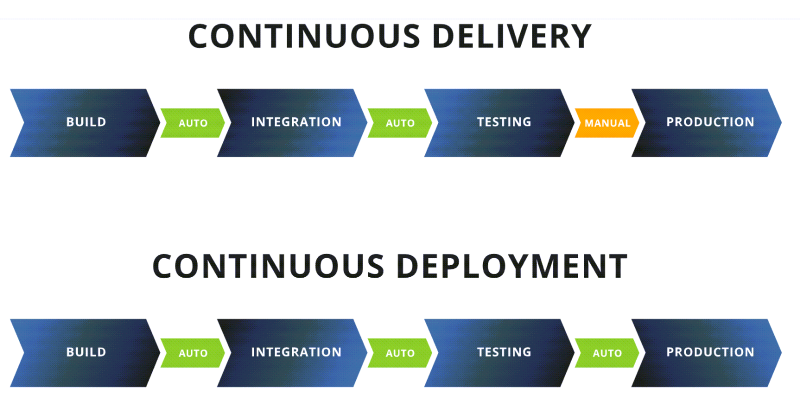

This is especially true if you want to branch out into Continuous Delivery. I have a whole article about how Jenkins Pipelines are NOT actual CD pipelines. Because they’re not true CD pipelines, Jenkins Pipelines lack essential CD functionality like approval gates.

And because Jenkins is tough even for relative experts, integrating stakeholders into the CI/CD process using Jenkins is a lot of work or even impossible. Don’t do all the work of onboarding CI/CD only to be hamstrung by using the wrong tools! Find out more from our free eBook “Level Up Your CI/CD with Jenkins.” Sign up for your free copy today!